Hello, this is 쯀리.

Today we'll look at Backup and Restore Methods.

This lecture is split into Part 1 and Part 2, so please keep that in mind!

https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/

Operating etcd clusters for Kubernetes


Kubernetes Backup and Restore Methods

Backup and restore are critical in a Kubernetes cluster: they keep your data safe and let you recover quickly from system failures or data loss. Here are some common ways to back up and restore Kubernetes.


- etcd Backup and Restore

- Volume Snapshots

- Application-level Backup

- Third-party Tools

1. etcd Backup and Restore

etcd is Kubernetes' core datastore and holds the cluster's state and configuration. Backing up etcd is the key way to preserve the cluster state.

2. Volume Snapshots

You can back up and restore data using snapshots of storage volumes. This is useful for protecting data stored on Persistent Volumes (PVs).

3. Application-level Backup

Back up and restore data at the application level. This is used to back up the data of a specific application, such as a database.

4. Third-party Tools

Several third-party tools support Kubernetes backup and restore. For example, Velero is an open-source tool that automates backing up and restoring Kubernetes clusters.
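As a rough illustration of method 2 (Volume Snapshots), the sketch below prints a VolumeSnapshot manifest (API group snapshot.storage.k8s.io/v1) for an existing PVC. It assumes the cluster has a CSI driver with snapshot support plus the snapshot CRDs and controller installed; volume_snapshot_manifest is a hypothetical helper, and the snapshot, PVC, and class names are placeholders.

```bash
# Hypothetical helper: print a VolumeSnapshot manifest for an existing PVC.
# Assumes a CSI driver with snapshot support and the snapshot CRDs/controller
# are installed. All names passed in are placeholders.
volume_snapshot_manifest() {
  local name=$1 namespace=$2 pvc=$3 snapclass=$4
  cat <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  volumeSnapshotClassName: ${snapclass}
  source:
    persistentVolumeClaimName: ${pvc}
EOF
}
```

Usage (placeholder names): volume_snapshot_manifest data-snap-1 default data-pvc csi-snapclass | kubectl apply -f -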

Quiz.

1. We have a working Kubernetes cluster with a set of web applications running. Let us first explore the setup.

How many deployments exist in the cluster in default namespace?

controlplane ~ ➜ k get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

blue 3/3 3 3 46s

red 2/2 2 2 47s
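To answer a counting question like this without reading the table by eye, you can drop the header row with kubectl's --no-headers flag and count the remaining lines. A minimal sketch; count_rows is a hypothetical helper name.

```bash
# Hypothetical helper: count the non-empty lines read from stdin, e.g. the
# rows of "kubectl get ... --no-headers" output.
count_rows() {
  awk 'NF { n++ } END { print n + 0 }'
}
```

Usage: kubectl get deployments --no-headers | count_rows, which for the output above would print 2 (blue and red).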

2. What is the version of ETCD running on the cluster?

Check the ETCD Pod or Process

controlplane ~ ➜ k get pods -A | grep etcd

kube-system etcd-controlplane 1/1 Running 0 10m

controlplane ~ ➜ k describe pod etcd-controlplane -n kube-system | grep etcd

...

Annotations: kubeadm.kubernetes.io/etcd.advertise-client-urls: https://192.29.4.9:2379

etcd:

Image: registry.k8s.io/etcd:3.5.12-0

Image ID: registry.k8s.io/etcd@sha256:44a8e24dcbba3470ee1fee21d5e88d128c936e9b55d4bc51fbef8086f8ed123b

etcdImage: registry.k8s.io/etcd:3.5.12-0
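The etcd version is the tag of the etcd image. A small sketch that extracts it from an image reference, assuming the reference has a tag and no @sha256 digest. The -0 suffix on kubeadm's etcd images appears to be an image build revision rather than part of the etcd version, so it is stripped here; image_tag is a hypothetical helper.

```bash
# Hypothetical helper: print the version part of an image reference such as
# registry.k8s.io/etcd:3.5.12-0. Assumes a tag is present and no @digest.
image_tag() {
  local image=$1
  local tag=${image##*:}      # text after the last ':'  -> 3.5.12-0
  printf '%s\n' "${tag%%-*}"  # drop the build suffix    -> 3.5.12
}
```

Usage: image_tag "$(kubectl -n kube-system get pod etcd-controlplane -o jsonpath='{.spec.containers[0].image}')". You can also ask the binary directly with kubectl -n kube-system exec etcd-controlplane -- etcd --version.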

3. At what address can you reach the ETCD cluster from the controlplane node?

Check the ETCD Service configuration in the ETCD POD

controlplane ~ ➜ k describe pods etcd-controlplane -n kube-system

...

--listen-client-urls=https://127.0.0.1:2379,https://192.29.4.9:2379

...

https://127.0.0.1:2379
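Because etcdctl will be run on the controlplane node itself, the loopback entry of --listen-client-urls is the natural endpoint. A sketch that picks it out of the comma-separated flag value; loopback_client_url is a hypothetical helper.

```bash
# Hypothetical helper: from a comma-separated --listen-client-urls value,
# print the loopback URL. Returns 1 if no 127.0.0.1 entry is present.
loopback_client_url() {
  local urls=$1 url
  local IFS=,                 # split the list on commas
  for url in $urls; do
    case $url in
      https://127.0.0.1:*|http://127.0.0.1:*) printf '%s\n' "$url"; return 0 ;;
    esac
  done
  return 1
}
```

Usage on the controlplane node: urls=$(grep -o -- '--listen-client-urls=[^ ]*' /etc/kubernetes/manifests/etcd.yaml | cut -d= -f2-), then loopback_client_url "$urls".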

4. Where is the ETCD server certificate file located? Note this path down as you will need to use it later

k describe pod etcd-controlplane -n kube-system

...

--cert-file=/etc/kubernetes/pki/etcd/server.crt

....

Volumes:

etcd-certs:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/pki/etcd

HostPathType: DirectoryOrCreate

etcd-data:

Type: HostPath (bare host directory volume)

Path: /var/lib/etcd

HostPathType: DirectoryOrCreate

The etcd-certs volume is mounted from /etc/kubernetes/pki/etcd and the file name is server.crt, so the server certificate is /etc/kubernetes/pki/etcd/server.crt.

5. Where is the ETCD CA Certificate file located?

controlplane ~ ➜ k describe pod etcd-controlplane -n kube-system | grep ca.crt

--peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

--trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

/etc/kubernetes/pki/etcd/ca.crt
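The etcd flags map onto the etcdctl options used in the next question: --cacert takes the --trusted-ca-file value, --cert the --cert-file value, and --key the --key-file value. A sketch that reads all three from the static pod manifest on the controlplane node, assuming the kubeadm default location /etc/kubernetes/manifests/etcd.yaml; etcd_tls_paths is a hypothetical helper.

```bash
# Hypothetical helper: print the CA, server cert and key flags from an etcd
# static pod manifest. The leading "--" in each pattern keeps
# --peer-cert-file, --peer-key-file, etc. from matching.
etcd_tls_paths() {
  local manifest=${1:-/etc/kubernetes/manifests/etcd.yaml} flag
  for flag in trusted-ca-file cert-file key-file; do
    grep -o -- "--${flag}=[^[:space:]]*" "$manifest" | head -n 1
  done
}
```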

6. The master node in our cluster is planned for a regular maintenance reboot tonight. While we do not anticipate anything to go wrong, we are required to take the necessary backups. Take a snapshot of the ETCD database using the built-in snapshot functionality.

Store the backup file at location /opt/snapshot-pre-boot.db

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
--cacert=/etc/kubernetes/pki/etcd/ca.crt \
--cert=/etc/kubernetes/pki/etcd/server.crt \
--key=/etc/kubernetes/pki/etcd/server.key \
snapshot save /opt/snapshot-pre-boot.db

Snapshot saved at /opt/snapshot-pre-boot.db

Reference documentation: https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/
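A snapshot is only useful if it can be read back, so it may be worth checking it right after saving. The sketch below wraps the save command above and then runs etcdctl snapshot status on the file. etcd_backup is a hypothetical helper, and ETCDCTL is a hypothetical override so the function can be dry-run with ETCDCTL=echo. As far as I know, etcd 3.5 marks snapshot status as deprecated in favour of etcdutl, but it should still work.

```bash
# Hypothetical helper: take an etcd snapshot with the kubeadm certificate
# paths, then check it. ETCDCTL overrides the binary (e.g. ETCDCTL=echo).
etcd_backup() {
  local snapshot_file=$1
  local etcdctl=${ETCDCTL:-etcdctl}
  local pki=/etc/kubernetes/pki/etcd
  ETCDCTL_API=3 "$etcdctl" --endpoints=https://127.0.0.1:2379 \
    --cacert="$pki/ca.crt" --cert="$pki/server.crt" --key="$pki/server.key" \
    snapshot save "$snapshot_file" || return
  # Reads the file locally and prints its hash, revision, key count and size.
  ETCDCTL_API=3 "$etcdctl" snapshot status "$snapshot_file" --write-out=table
}
```

Usage on the controlplane node: etcd_backup /opt/snapshot-pre-boot.db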

9. Wake up! We have a conference call! After the reboot the master nodes came back online, but none of our applications are accessible. Check the status of the applications on the cluster. What's wrong?

Nothing shows up: there are no pods or deployments, and only the default kubernetes service is left.

controlplane ~ ➜ k get pods

No resources found in default namespace.

controlplane ~ ➜ k get deploy

No resources found in default namespace.

controlplane ~ ➜ k get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2m7s

10. Luckily we took a backup. Restore the original state of the cluster using the backup file.

1. Restore the snapshot taken earlier (/opt/snapshot-pre-boot.db) into a new data directory:

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt \
--cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key \
--data-dir /var/lib/etcd-from-backup \
snapshot restore /opt/snapshot-pre-boot.db
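snapshot restore works purely on local files: it builds a new data directory from the snapshot and does not talk to the running etcd, so the endpoint and certificate flags above are not actually needed. The sketch below also fails early if the snapshot is missing or the target path already exists. To my knowledge, etcdctl itself only rejects a non-empty data directory, so this check is stricter. etcd_restore and the ETCDCTL override are hypothetical names.

```bash
# Hypothetical helper: restore a snapshot into a brand-new data directory.
# This is an offline operation on local files, so no endpoint or
# certificate flags are passed. ETCDCTL overrides the binary.
etcd_restore() {
  local snapshot=$1 data_dir=$2
  local etcdctl=${ETCDCTL:-etcdctl}
  if [ ! -f "$snapshot" ]; then
    echo "snapshot not found: $snapshot" >&2
    return 1
  fi
  if [ -e "$data_dir" ]; then
    echo "refusing to restore: $data_dir already exists" >&2
    return 1
  fi
  ETCDCTL_API=3 "$etcdctl" snapshot restore "$snapshot" --data-dir "$data_dir"
}
```

Usage: etcd_restore /opt/snapshot-pre-boot.db /var/lib/etcd-from-backup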

2. Edit the YAML file

controlplane ~ ✖ cd /etc/kubernetes/manifests

controlplane /etc/kubernetes/manifests ➜ ls

etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

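controlplane /etc/kubernetes/manifests ➜ vi etcd.yaml

Change the etcd-data hostPath to the restored directory. Once the file is saved, the kubelet recreates the etcd static pod on top of the restored data, and the pods, services, and deployments come back automatically.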

## as-is
..
  volumes:
  - hostPath:
      path: /var/lib/etcd
      type: DirectoryOrCreate
    name: etcd-data
status: {}

## to-be
....
  volumes:
  - hostPath:
      path: /var/lib/etcd-from-backup
      type: DirectoryOrCreate
    name: etcd-data
status: {}
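The same edit can be made non-interactively. The sketch below rewrites only the hostPath line that is exactly path: /var/lib/etcd, leaving --data-dir and the volumeMounts entry alone: the container keeps using /var/lib/etcd, which now maps to the restored host directory. It keeps a copy of the original outside the manifests directory, since the kubelet may try to load any extra file left in /etc/kubernetes/manifests. set_etcd_data_hostpath and BACKUP_DIR are hypothetical names.

```bash
# Hypothetical helper: point the etcd-data hostPath of a kubeadm etcd
# manifest at a new directory. Only a line that is exactly
# "path: /var/lib/etcd" (any indentation) changes.
set_etcd_data_hostpath() {
  local manifest=$1 new_dir=$2
  local backup="${BACKUP_DIR:-/tmp}/$(basename "$manifest").orig"
  local tmp rc
  cp "$manifest" "$backup" || return
  tmp=$(mktemp) || return
  sed "s#^\([[:space:]]*\)path: /var/lib/etcd\$#\1path: ${new_dir}#" "$manifest" > "$tmp" &&
    cat "$tmp" > "$manifest"   # rewrite in place so owner and permissions are kept
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Usage: set_etcd_data_hostpath /etc/kubernetes/manifests/etcd.yaml /var/lib/etcd-from-backup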

Verify:

controlplane ~ ➜ k get pods

NAME READY STATUS RESTARTS AGE

blue-fffb6db8d-5pkpb 1/1 Running 0 25m

blue-fffb6db8d-8p8wz 1/1 Running 0 25m

blue-fffb6db8d-xhb57 1/1 Running 0 25m

red-85c9fd5d6f-85xzt 1/1 Running 0 25m

red-85c9fd5d6f-95fhc 1/1 Running 0 25m

controlplane ~ ➜ k get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

blue 3/3 3 3 25m

red 2/2 2 2 25m

controlplane ~ ➜ k get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

blue-service NodePort 10.96.28.222 <none> 80:30082/TCP 25m

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 31m

red-service NodePort 10.101.140.90 <none> 80:30080/TCP 25m
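While the etcd static pod restarts, the API server can refuse connections for a while, so kubectl calls may fail briefly before the resources reappear. A small retry helper (hypothetical, not part of kubectl) keeps re-running a command until it succeeds or the attempts run out.

```bash
# Hypothetical helper: re-run a command until it succeeds, up to ATTEMPTS
# times, sleeping DELAY seconds between failed attempts.
retry() {
  local attempts=$1 delay=$2 i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

Usage: retry 30 10 kubectl get deployments blue red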

Quiz2.

1. In this lab environment, you will get to work with multiple kubernetes clusters where we will practice backing up and restoring the ETCD database.

2. You will notice that you are logged in to the student-node (instead of the controlplane).

The student-node has the kubectl client and has access to all the Kubernetes clusters that are configured in this lab environment.

Before proceeding to the next question, explore the student-node and the clusters it has access to.

student-node ~ ➜ k get nodes

NAME STATUS ROLES AGE VERSION

cluster1-controlplane Ready control-plane 36m v1.24.0

cluster1-node01 Ready <none> 36m v1.24.0

student-node ~ ➜ k get deploy

No resources found in default namespace.

3. How many clusters are defined in the kubeconfig on the student-node?

You can make use of the kubectl config command.

student-node ~ ✖ k config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://cluster1-controlplane:6443
  name: cluster1
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://192.30.120.9:6443
  name: cluster2
contexts:
- context:
    cluster: cluster1
    user: cluster1
  name: cluster1
- context:
    cluster: cluster2
    user: cluster2
  name: cluster2
current-context: cluster1
kind: Config
preferences: {}
users:
- name: cluster1
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED
- name: cluster2
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

The kubeconfig defines two clusters: cluster1 and cluster2.
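For a quick count without reading the whole kubeconfig, kubectl config view can print just the cluster names with a JSONPath expression (kubectl config get-clusters also lists them, under a NAME header). A sketch; count_kubeconfig_clusters and the KUBECTL override are hypothetical names.

```bash
# Hypothetical helper: count the clusters defined in the current kubeconfig.
# KUBECTL overrides the binary (hypothetical hook for dry runs and tests).
count_kubeconfig_clusters() {
  local kubectl=${KUBECTL:-kubectl}
  "$kubectl" config view -o jsonpath='{.clusters[*].name}' | wc -w | tr -d ' '
}
```

On the student-node above, this should print 2.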

4. How many nodes (both controlplane and worker) are part of cluster1?

Make sure to switch the context to cluster1:

kubectl config use-context cluster1

cluster1 has two nodes:

student-node ~ ➜ k get nodes

NAME STATUS ROLES AGE VERSION

cluster1-controlplane Ready control-plane 39m v1.24.0

cluster1-node01 Ready <none> 39m v1.24.0

5. What is the name of the controlplane node in cluster2?

Make sure to switch the context to cluster2:

student-node ~ ➜ k config use-context cluster2

Switched to context "cluster2".

student-node ~ ➜ k get nodes

NAME STATUS ROLES AGE VERSION

cluster2-controlplane Ready control-plane 40m v1.24.0

cluster2-node01 Ready <none> 40m v1.24.0

cluster2-controlplane
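Instead of switching with use-context every time, kubectl's global --context flag runs a single command against another context without changing current-context. A sketch that lists the nodes of every context in the kubeconfig; nodes_per_context and KUBECTL are hypothetical names.

```bash
# Hypothetical helper: show the nodes of every context in the kubeconfig
# without changing current-context. KUBECTL overrides the binary.
nodes_per_context() {
  local kubectl=${KUBECTL:-kubectl} ctx
  for ctx in $("$kubectl" config get-contexts -o name); do
    echo "== $ctx"
    "$kubectl" --context "$ctx" get nodes
  done
}
```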

6. You can SSH to all the nodes (of both clusters) from the student-node.

For example :

student-node ~ ➜ ssh cluster1-controlplane

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-1086-gcp x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are

not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

cluster1-controlplane ~ ➜

## To exit

cluster1-controlplane ~ ➜ exit (or logout)

7. How is ETCD configured for cluster1?

Remember, you can access the clusters from student-node using the kubectl tool. You can also ssh to the cluster nodes from the student-node.

Make sure to switch the context to cluster1:

student-node ~ ➜ k get pods -A -o wide| grep etcd

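kube-system etcd-cluster1-controlplane 1/1 Running 0 43m 192.30.120.6 cluster1-controlplane <none> <none>

etcd runs as a pod (etcd-cluster1-controlplane) on the control plane node itself. In other words, cluster1 uses the stacked ETCD topology: the distributed data store provided by etcd is stacked on top of the kubeadm-managed nodes that run the control plane components.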

Stacked ETCD

8. How is ETCD configured for cluster2?

Make sure to switch the context to cluster2:

student-node ~ ➜ kubectl config use-context cluster2

Switched to context "cluster2".

student-node ~ ➜ k get pods -A | grep etcd

The grep returns nothing: cluster2 has no stacked ETCD pod.

So let's SSH into the control plane node and check what is configured on the server itself.

student-node ~ ✖ k get nodes

NAME STATUS ROLES AGE VERSION

cluster2-controlplane Ready control-plane 49m v1.24.0

cluster2-node01 Ready <none> 48m v1.24.0

student-node ~ ➜ ssh cluster2-controlplane

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-1106-gcp x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are

not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

Last login: Fri Jul 12 15:44:42 2024 from 192.30.120.4

cluster2-controlplane ~ ➜ ls /etc/kubernetes/manifests/

kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

cluster2-controlplane ~ ➜ ps -ef | grep etcd

root 1750 1334 0 14:56 ? 00:02:01 kube-apiserver --advertise-address=192.30.120.9 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.pem --etcd-certfile=/etc/kubernetes/pki/etcd/etcd.pem --etcd-keyfile=/etc/kubernetes/pki/etcd/etcd-key.pem --etcd-servers=https://192.30.120.21:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

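root 8408 8224 0 15:47 pts/1 00:00:00 grep etcd

There is no etcd.yaml in /etc/kubernetes/manifests and no etcd process on this node. The only match for ps -ef | grep etcd is kube-apiserver, which is started with --etcd-servers=https://192.30.120.21:2379, an address other than this node's own (192.30.120.9). So cluster2 uses an external ETCD.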

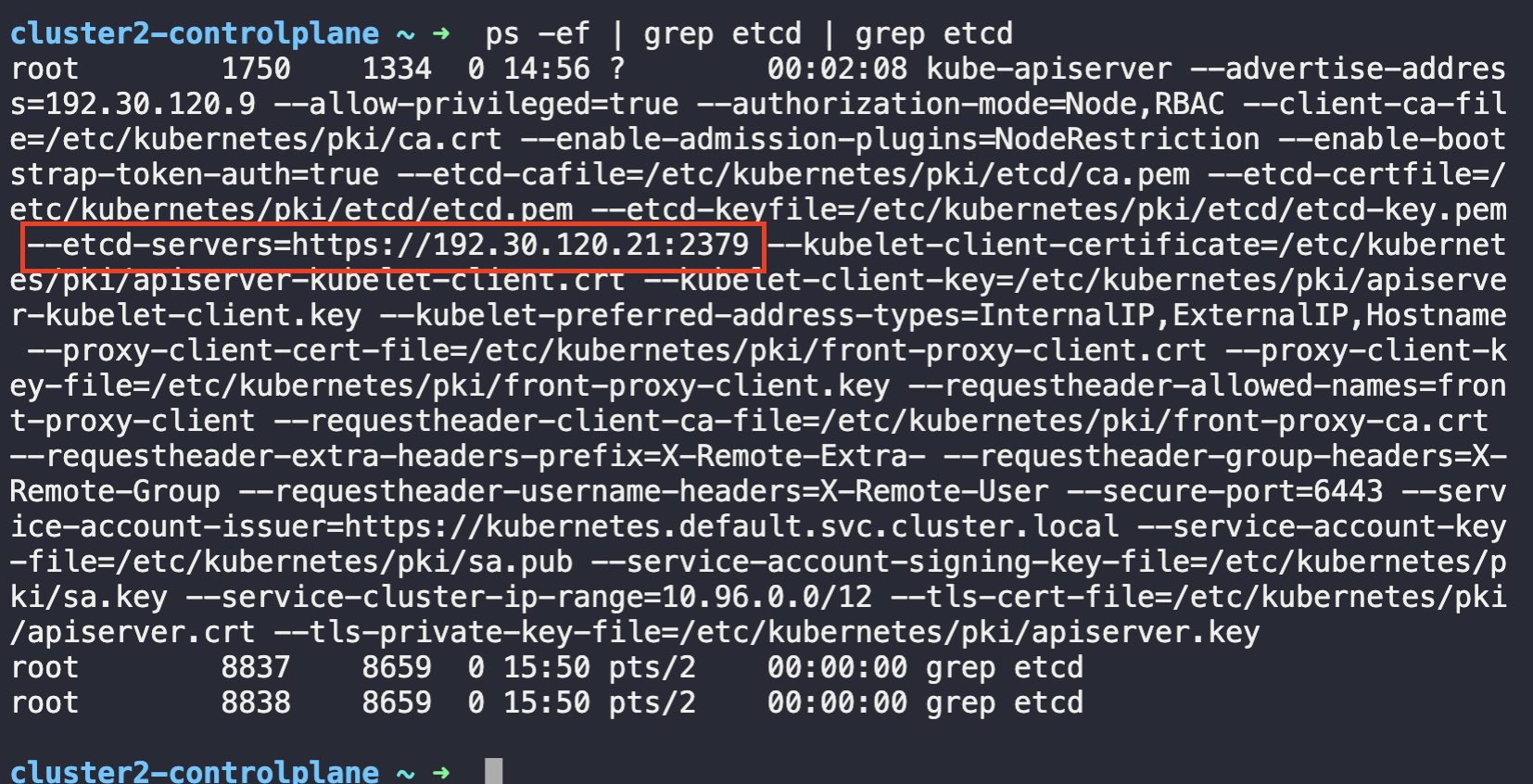
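The two checks above (an etcd.yaml static pod manifest versus an --etcd-servers flag pointing elsewhere) can be combined into a rough heuristic for a kubeadm-style control plane node. This is a sketch, not an official detection method; etcd_topology is a hypothetical helper and it only inspects the manifests directory.

```bash
# Hypothetical helper: rough guess at the etcd topology of a kubeadm-style
# control plane node, based only on the static pod manifests directory.
etcd_topology() {
  local dir=${1:-/etc/kubernetes/manifests}
  if [ -f "$dir/etcd.yaml" ]; then
    echo stacked      # etcd runs as a static pod next to the API server
  elif grep -q -- '--etcd-servers=' "$dir/kube-apiserver.yaml" 2>/dev/null; then
    echo external     # API server points at etcd, but no local etcd pod
  else
    echo unknown
  fi
}
```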

9. What is the IP address of the External ETCD datastore used in cluster2?

cluster2-controlplane ~ ➜ ps -ef | grep etcd

root 1750 1334 0 14:56 ? 00:02:08 kube-apiserver --advertise-address=192.30.120.9 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.pem --etcd-certfile=/etc/kubernetes/pki/etcd/etcd.pem --etcd-keyfile=/etc/kubernetes/pki/etcd/etcd-key.pem --etcd-servers=https://192.30.120.21:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

root 8837 8659 0 15:50 pts/2 00:00:00 grep etcd

192.30.120.21:2379
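To pull the address out without scanning the long command line by eye, you can match the --etcd-servers flag directly. A sketch; etcd_servers_from_cmdline is a hypothetical helper.

```bash
# Hypothetical helper: read a kube-apiserver command line on stdin and print
# the etcd host:port list from --etcd-servers, without the https:// scheme.
etcd_servers_from_cmdline() {
  grep -o -- '--etcd-servers=[^[:space:]]*' | head -n 1 | cut -d= -f2- | sed -E 's#https?://##g'
}
```

Usage: ps -ef | grep '[k]ube-apiserver' | etcd_servers_from_cmdline. The [k] in the pattern keeps grep from matching its own process line.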

10. What is the default data directory used for the ETCD datastore used in cluster1?

Remember, this cluster uses a Stacked ETCD topology.

Make sure to switch the context to cluster1:

student-node ~ ✖ k get pods -A | grep etcd

kube-system etcd-cluster1-controlplane 1/1 Running 0 55m

student-node ~ ✖ k describe pods etcd-cluster1-controlplane -n kube-system

Name: etcd-cluster1-controlplane

...

Volumes:

etcd-certs:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/pki/etcd

HostPathType: DirectoryOrCreate

etcd-data:

Type: HostPath (bare host directory volume)

Path: /var/lib/etcd

HostPathType: DirectoryOrCreate

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoExecute op=Exists

Events: <none>

/var/lib/etcd is configured as the default etcd-data directory.
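The same value can be read straight from the pod spec with a JSONPath filter on the volume name, assuming the kubeadm volume name etcd-data. etcd_data_hostpath and KUBECTL are hypothetical names.

```bash
# Hypothetical helper: print the host path behind the etcd-data volume of a
# stacked etcd pod. KUBECTL overrides the binary (hypothetical test hook).
etcd_data_hostpath() {
  local pod=$1 kubectl=${KUBECTL:-kubectl}
  "$kubectl" -n kube-system get pod "$pod" \
    -o jsonpath='{.spec.volumes[?(@.name=="etcd-data")].hostPath.path}'
}
```

Usage (with the cluster1 context): etcd_data_hostpath etcd-cluster1-controlplane, which should print /var/lib/etcd here.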

11. For the subsequent questions, you would need to login to the External ETCD server.

To do this, open a new terminal (using the + button located above the default terminal).

From the new terminal you can now SSH from the student-node to either the IP of the ETCD datastore (that you identified in the previous questions) OR the hostname etcd-server:

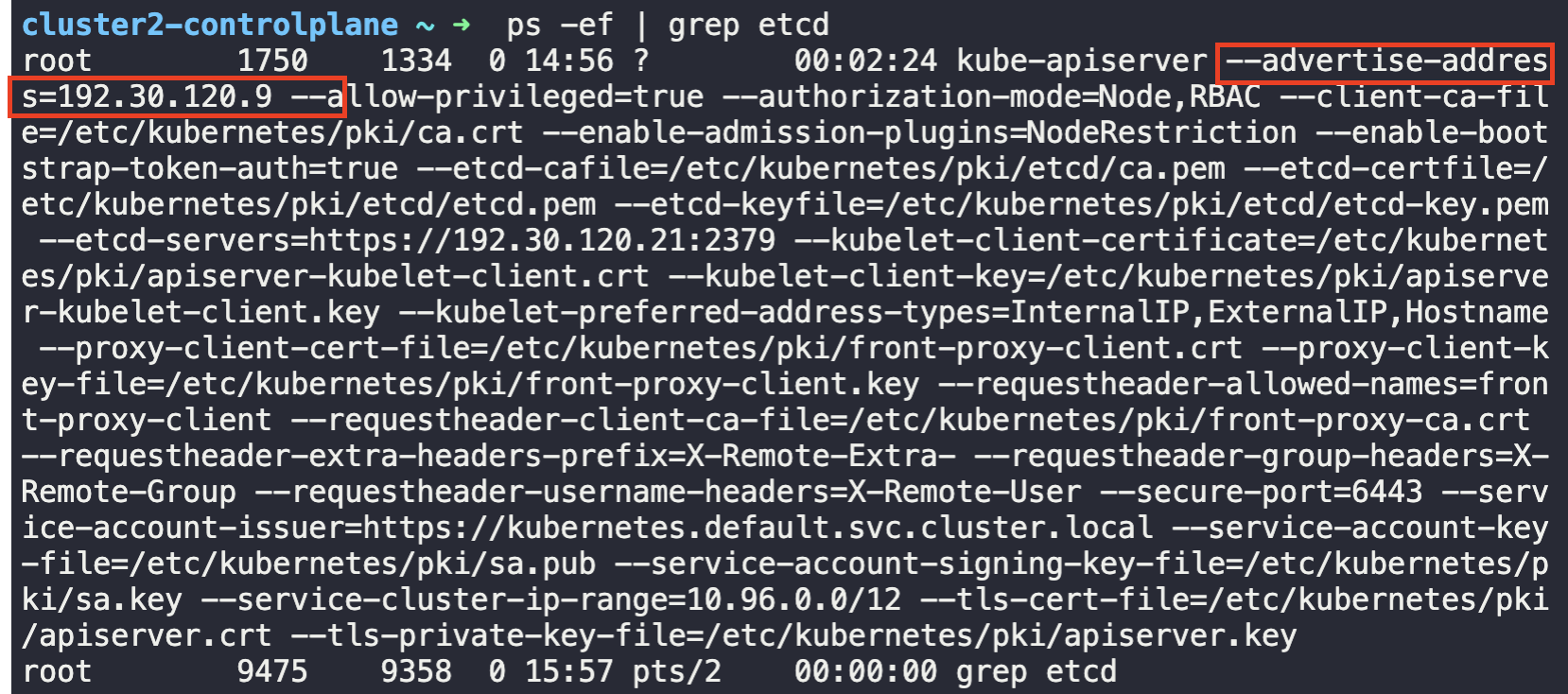

Let's check whether we can connect from the student-node.

student-node ~ ✖ k config use-context cluster2

Switched to context "cluster2".

student-node ~ ➜ ssh cluster2-controlplane

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-1106-gcp x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are

not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

Last login: Fri Jul 12 15:49:43 2024 from 192.30.120.4

cluster2-controlplane ~ ➜ ps -ef | grep etcd

root 1750 1334 0 14:56 ? 00:02:24 kube-apiserver --advertise-address=192.30.120.9 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.pem --etcd-certfile=/etc/kubernetes/pki/etcd/etcd.pem --etcd-keyfile=/etc/kubernetes/pki/etcd/etcd-key.pem --etcd-servers=https://192.30.120.21:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

root 9475 9358 0 15:57 pts/2 00:00:00 grep etcd

cluster2-controlplane ~ ➜ exit

logout

Connection to cluster2-controlplane closed.

student-node ~ ➜ ssh 192.30.120.9

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-1106-gcp x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are

not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

Last login: Fri Jul 12 15:57:21 2024 from 192.30.120.4

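cluster2-controlplane ~ ➜

Note that 192.30.120.9 is cluster2's API server address (--advertise-address), so this session lands on cluster2-controlplane again, as the prompt shows. The External ETCD server is the host listed in --etcd-servers in the ps -ef | grep etcd output, i.e. 192.30.120.21, so connect with ssh 192.30.120.21 or ssh etcd-server.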

12. What is the default data directory used for the ETCD datastore used in cluster2?

Remember, this cluster uses an External ETCD topology.

etcd-server ~ ➜ ps -ef | grep etcd

etcd 819 1 0 14:56 ? 00:01:24 /usr/local/bin/etcd --name etcd-server --data-dir=/var/lib/etcd-data --cert-file=/etc/etcd/pki/etcd.pem --key-file=/etc/etcd/pki/etcd-key.pem --peer-cert-file=/etc/etcd/pki/etcd.pem --peer-key-file=/etc/etcd/pki/etcd-key.pem --trusted-ca-file=/etc/etcd/pki/ca.pem --peer-trusted-ca-file=/etc/etcd/pki/ca.pem --peer-client-cert-auth --client-cert-auth --initial-advertise-peer-urls https://192.30.120.21:2380 --listen-peer-urls https://192.30.120.21:2380 --advertise-client-urls https://192.30.120.21:2379 --listen-client-urls https://192.30.120.21:2379,https://127.0.0.1:2379 --initial-cluster-token etcd-cluster-1 --initial-cluster etcd-server=https://192.30.120.21:2380 --initial-cluster-state new

root 1068 991 0 16:07 pts/0 00:00:00 grep etcd

--data-dir=/var/lib/etcd-data
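etcd accepts both --flag=value and --flag value forms (this command line mixes them), so a helper that handles both is handy for reading values such as --data-dir. A sketch; flag_value is a hypothetical helper.

```bash
# Hypothetical helper: print the value of --FLAG from a command line read on
# stdin. Handles "--flag=value" and "--flag value"; prints the first match.
flag_value() {
  awk -v f="--$1" '{
    for (i = 1; i <= NF; i++) {
      if (index($i, f "=") == 1) { print substr($i, length(f) + 2); exit }
      if ($i == f && i < NF)     { print $(i + 1); exit }
    }
  }'
}
```

Usage on etcd-server: ps -ef | grep '[/]usr/local/bin/etcd' | flag_value data-dir, or systemctl cat etcd | flag_value data-dir to read the unit file instead of the running process.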

13. How many nodes are part of the ETCD cluster that etcd-server is a part of?

etcd-server ~ ➜ ETCDCTL_API=3 etcdctl \
> --endpoints=https://127.0.0.1:2379 \
> --cacert=/etc/etcd/pki/ca.pem \
> --cert=/etc/etcd/pki/etcd.pem \
> --key=/etc/etcd/pki/etcd-key.pem \
> member list

575be90dffadd259, started, etcd-server, https://192.30.120.21:2380, https://192.30.120.21:2379, false

Only one member, 575be90dffadd259, is listed, so the ETCD cluster has a single node.
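member list prints one line per member, so the node count is just the number of non-empty lines. A sketch using the certificate paths from the etcd-server command line above; etcd_member_count and ETCDCTL are hypothetical names. Adding --write-out=table to member list gives a more readable view when checking by hand.

```bash
# Hypothetical helper: count the members of the etcd cluster on etcd-server
# (one line per member). ETCDCTL overrides the binary for dry runs and tests.
etcd_member_count() {
  local etcdctl=${ETCDCTL:-etcdctl}
  ETCDCTL_API=3 "$etcdctl" --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/etcd/pki/ca.pem --cert=/etc/etcd/pki/etcd.pem \
    --key=/etc/etcd/pki/etcd-key.pem member list |
    awk 'NF { n++ } END { print n + 0 }'
}
```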

14. Take a backup of etcd on cluster1 and save it on the student-node at the path /opt/cluster1.db

If needed, make sure to set the context to cluster1 (on the student-node):

Check the etcd configuration:

student-node ~ ➜ kubectl describe pods -n kube-system etcd-cluster1-controlplane | grep advertise-client-urls

Annotations: kubeadm.kubernetes.io/etcd.advertise-client-urls: https://192.30.120.6:2379

--advertise-client-urls=https://192.30.120.6:2379

student-node ~ ➜ kubectl describe pods -n kube-system etcd-cluster1-controlplane | grep pki

--cert-file=/etc/kubernetes/pki/etcd/server.crt

--key-file=/etc/kubernetes/pki/etcd/server.key

--peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt

--peer-key-file=/etc/kubernetes/pki/etcd/peer.key

--peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

--trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

/etc/kubernetes/pki/etcd from etcd-certs (rw)

Path: /etc/kubernetes/pki/etcd

SSH into cluster1's controlplane node, then take the backup using the endpoint and certificates identified above:

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key snapshot save /opt/cluster1.db

Snapshot saved at /opt/cluster1.db

Exit the node and copy the file to the student-node:

cluster1-controlplane ~ ➜ exit

logout

Connection to cluster1-controlplane closed.

student-node ~ ➜ scp cluster1-controlplane:/opt/cluster1.db /opt

cluster1.db 100% 2100KB 114.2MB/s 00:00
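The two steps (snapshot on the controlplane node, then copy back) can be chained from the student-node in one go. A sketch assuming passwordless SSH as in this lab and the kubeadm certificate paths found above; backup_remote_etcd and the SSH/SCP overrides are hypothetical names.

```bash
# Hypothetical helper: snapshot etcd on a remote kubeadm control plane node
# and copy the file back. SSH/SCP override the binaries for dry runs and tests.
backup_remote_etcd() {
  local host=$1 remote_path=$2 local_path=$3
  local ssh=${SSH:-ssh} scp=${SCP:-scp}
  local pki=/etc/kubernetes/pki/etcd
  # The backslash-newlines are removed inside the double quotes, so the
  # remote side receives one command line.
  "$ssh" "$host" "ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
--cacert=$pki/ca.crt --cert=$pki/server.crt --key=$pki/server.key \
snapshot save $remote_path" &&
    "$scp" "$host:$remote_path" "$local_path"
}
```

Usage from the student-node: backup_remote_etcd cluster1-controlplane /opt/cluster1.db /opt/cluster1.db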

15. An ETCD backup for cluster2 is stored at /opt/cluster2.db. Use this snapshot file to carry out a restore on cluster2 to a new path /var/lib/etcd-data-new.

Once the restore is complete, ensure that the controlplane components on cluster2 are running.

The snapshot was taken when there were objects created in the critical namespace on cluster2. These objects should be available post restore.

If needed, make sure to set the context to cluster2 (on the student-node):

The snapshot /opt/cluster2.db is already provided on the student-node, so copy it to the External ETCD server with scp:

scp /opt/cluster2.db etcd-server:/root

- SSH into etcd-server and run the restore with that file.

student-node ~ ✖ ssh etcd-server

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-1106-gcp x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are

not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

Last login: Fri Jul 12 16:08:20 2024 from 192.30.120.3

etcd-server ~ ➜ ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/etcd/pki/ca.pem --cert=/etc/etcd/pki/etcd.pem --key=/etc/etcd/pki/etcd-key.pem snapshot restore /root/cluster2.db --data-dir /var/lib/etcd-data-new

{"level":"info","ts":1720801324.4161801,"caller":"snapshot/v3_snapshot.go:296","msg":"restoring snapshot","path":"/root/cluster2.db","wal-dir":"/var/lib/etcd-data-new/member/wal","data-dir":"/var/lib/etcd-data-new","snap-dir":"/var/lib/etcd-data-new/member/snap"}

{"level":"info","ts":1720801324.433792,"caller":"mvcc/kvstore.go:388","msg":"restored last compact revision","meta-bucket-name":"meta","meta-bucket-name-key":"finishedCompactRev","restored-compact-revision":6341}

{"level":"info","ts":1720801324.4411652,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"cdf818194e3a8c32","local-member-id":"0","added-peer-id":"8e9e05c52164694d","added-peer-peer-urls":["http://localhost:2380"]}

{"level":"info","ts":1720801324.4817395,"caller":"snapshot/v3_snapshot.go:309","msg":"restored snapshot","path":"/root/cluster2.db","wal-dir":"/var/lib/etcd-data-new/member/wal","data-dir":"/var/lib/etcd-data-new","snap-dir":"/var/lib/etcd-data-new/member/snap"}

Next, point the etcd service at the new data directory (to-be: /var/lib/etcd-data-new):

etcd-server ~ ➜ cd /etc/systemd/system/

etcd-server /etc/systemd/system ➜ vi etcd.service

...

--data-dir=/var/lib/etcd-data-new \

..
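The unit file edit can also be scripted with sed. A sketch that replaces whatever value --data-dir currently has and keeps the original as etcd.service.orig; systemd should ignore that file because .orig is not a unit suffix. set_unit_data_dir is a hypothetical helper.

```bash
# Hypothetical helper: replace the --data-dir value in an etcd systemd unit,
# keeping the original as <unit>.orig (sed -i.orig works with GNU and BSD sed).
set_unit_data_dir() {
  local unit=$1 new_dir=$2
  sed -i.orig "s#--data-dir=[^[:space:]]*#--data-dir=${new_dir}#" "$unit"
}
```

Usage: set_unit_data_dir /etc/systemd/system/etcd.service /var/lib/etcd-data-new, followed by the ownership fix and systemctl daemon-reload below.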

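Check the ownership of the new directory and fix it: the restore ran as root, but the etcd process runs as the etcd user (see the ps output in question 12), so it needs access to the new directory.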

etcd-server /var/lib ➜ ls -al | grep etcd-data-new

drwx------ 3 root root 4096 Jul 12 16:22 etcd-data-new

etcd-server /var/lib ➜ chown -R etcd:etcd /var/lib/etcd-data-new

etcd-server /var/lib ➜ ls -al | grep etcd-data-new

drwx------ 3 etcd etcd 4096 Jul 12 16:22 etcd-data-new

Restart the etcd service:

etcd-server ~ ➜ systemctl daemon-reload

etcd-server ~ ➜ systemctl restart etcd

Today's quiz was a really long one. It was tough, but I think this chapter was well worth learning.

See you next time in Chapter 5!

References

※ Udemy Labs - Certified Kubernetes Administrator with Practice Tests
